Can I make my own custom search engine from scratch?

The title sounds ambitious, but the question is an interesting one. As a computer science student I had always wondered how a search engine works: how does it learn about a newly launched web resource, how does it know whether a website is up or down, and why does a new website take time to show up in search results? There are many such questions, and answering them means understanding the search engine itself. This is the first article in a series on search engines. The series will cover the basics, the modules of a search engine, the development of each module with code and results, and the integration of the modules into one framework.

We all use search engines every day, for example:

OK Google, when is my cab going to arrive? When is my birthday?

Alexa, will it rain today?

Search engines like Google and DuckDuckGo do the same job, but they differ in philosophy. So let's get down to the basics of search engines and get our hands dirty coding a basic one.

Basics

A search engine is a complex piece of software that does many things, such as finding the answer to the question the user asked and deciding which websites appear in the first 10 results. To explain how it works, we will first look at the main components of a search engine:

- crawling

- parsing

- indexing

Crawling

Crawling is the process that feeds the search engine with the data it needs. The crawler visits a page over the internet, downloads its content, and saves it to the search engine's database. Some of the information a crawler looks for:

- title of the website

- URL

- keywords

- meta information

- domain information

- JavaScript

- CSS

- headings

- other links in the website
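The crawling loop described above can be sketched in a few lines of Python. This is only a minimal sketch using the standard library, not a production crawler: the names `LinkCollector`, `fetch`, and `crawl` are my own for illustration, the "database" is a plain dictionary, and the download function is passed in as a parameter so the loop can be tried without touching the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch(url):
    """Default downloader: return the page's HTML as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(seed_url, fetch_page=fetch, max_pages=10):
    """Breadth-first crawl starting at seed_url; returns {url: html}."""
    store = {}                 # stands in for the search engine's database
    seen = {seed_url}          # URLs already queued, to avoid revisiting
    queue = deque([seed_url])
    while queue and len(store) < max_pages:
        url = queue.popleft()
        try:
            html = fetch_page(url)
        except Exception:
            continue           # skip pages that fail to download
        store[url] = html
        collector = LinkCollector()
        collector.feed(html)
        for link in collector.links:
            absolute = urljoin(url, link)   # resolve relative links
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return store
```

Calling `crawl("http://example.test/")` with the default `fetch` would download real pages, so for experiments it is easier to pass a fake `fetch_page` that reads from a dictionary. A real crawler would also need politeness rules such as honouring robots.txt and rate limits, which this sketch leaves out.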

The next process is parsing.

Parsing

Parsing is the process of scanning a downloaded webpage for the content inside it.

So how do we do it?

We extract features from the webpage and store them under a handle, i.e., the web URL. Some of these features are:

- website domain name

- website title

- frequently used tokens (words)

- headings on the page

- links to other websites

- links to other pages of the site

- text marked as bold

- thumbnails of images on the website
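To make the feature list concrete, here is a rough sketch of a parser built on Python's standard `html.parser` module. The class `FeatureExtractor`, the function `extract_features`, and the shape of the returned dictionary are assumptions of mine for illustration. Image thumbnails are left out, and the tokenizer is a simple regular expression rather than anything language-aware.

```python
import re
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class FeatureExtractor(HTMLParser):
    """Collects title, headings, bold text, links and visible text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings, self.bold, self.links, self.text = [], [], [], []
        self._open = []        # tags that are currently open

    def handle_starttag(self, tag, attrs):
        self._open.append(tag)
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self._open:
            # pop everything up to and including the matching open tag
            while self._open and self._open.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if not text or "script" in self._open or "style" in self._open:
            return
        if "title" in self._open:
            self.title += text
            return
        self.text.append(text)
        if HEADING_TAGS.intersection(self._open):
            self.headings.append(text)
        if "b" in self._open or "strong" in self._open:
            self.bold.append(text)


def extract_features(url, html, top_n=5):
    """Return a dict of features for one page, keyed by its URL."""
    parser = FeatureExtractor()
    parser.feed(html)
    domain = urlparse(url).netloc
    internal, external = [], []
    for href in parser.links:
        absolute = urljoin(url, href)
        target = internal if urlparse(absolute).netloc == domain else external
        target.append(absolute)
    tokens = re.findall(r"[a-z0-9]+", " ".join(parser.text).lower())
    return {
        "url": url,
        "domain": domain,
        "title": parser.title,
        "headings": parser.headings,
        "bold": parser.bold,
        "internal_links": internal,
        "external_links": external,
        "top_tokens": [word for word, _ in Counter(tokens).most_common(top_n)],
    }
```

The returned dictionary can be stored as-is with the URL as its key, which matches the idea of keeping the features under the web URL as a handle.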

Indexing

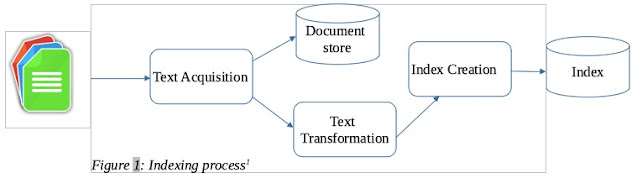

Indexing is the process of processing the data of the crawled websites and arranging it in an order that lets us retrieve the required information later. It is shown in Figure 1 above.

In Figure 1, text acquisition is done in the parsing phase and the data is stored in the document store. Text transformation then cleans the text and passes it on, and index creation is the final step.
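As a small illustration of the last two steps, the sketch below cleans the text and then builds an inverted index, i.e., a map from each word to the set of pages that contain it. An inverted index is one common way to do index creation, and I am assuming it here. The function names, the tiny stop-word list, and the dictionary used as a document store are likewise illustrative choices of mine.

```python
import re
from collections import defaultdict

# A tiny, illustrative stop-word list; real engines use much larger ones.
STOP_WORDS = {"a", "an", "and", "the", "is", "of", "to", "in"}


def transform(text):
    """Text transformation: lowercase, split into tokens, drop stop words."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return [token for token in tokens if token not in STOP_WORDS]


def build_index(document_store):
    """Index creation: map every token to the set of URLs containing it."""
    index = defaultdict(set)
    for url, text in document_store.items():
        for token in transform(text):
            index[token].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every term of the query."""
    terms = transform(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results
```

With a document store such as `{"http://example.test/a": "The crawler downloads web pages"}`, calling `search(build_index(store), "crawler pages")` returns the set of URLs whose text contains both words. Ranking the results is a separate problem that this sketch does not attempt.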

I hope you now understand the basics of a search engine.

In my next article I’ll explain each module in detail.
